Abstract

Research Overview

Introduction

Problem Statement

The unidirectional reasoning model of large models exposes significant defects in computational redundancy and insufficient dynamic adjustment ability when handling complex tasks.

Core Challenges Addressed

- Decomposing a large model with hundreds of billions of parameters into semantically coherent and dynamically reorganizable sub-modules

- Designing controllers that balance both performance and efficiency while avoiding state-space explosion

- Ensuring framework universality across different tasks including textual, visual, and multimodal applications

Architecture

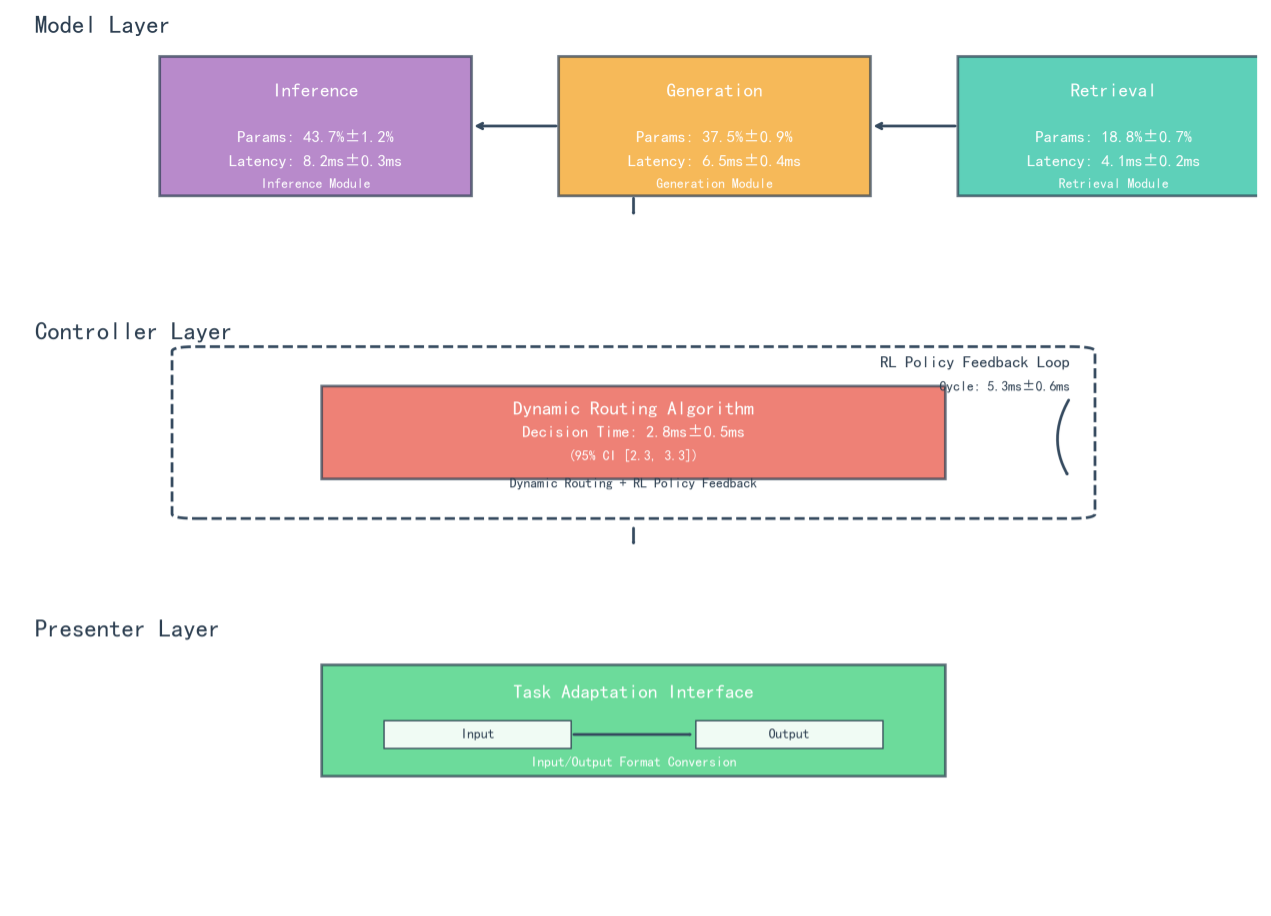

MCP Framework Design

The MCP framework implements model decoupling - intelligent scheduling - task adaptation through three-layer collaboration, building a dynamically scalable large model reasoning system.

Model Layer

Functional decoupling into reasoning, generation, and retrieval modules. Each module specializes in specific cognitive tasks while maintaining lightweight communication protocols for seamless collaboration.

Controller Layer

Dynamic routing & RL optimization using reinforcement learning-driven algorithms to achieve millisecond-level scheduling of computing resources with closed-loop feedback control.

Presenter Layer

Task adaptation & interpretability generation. Provides transparent reasoning pathways and intermediate results for human understanding and validation.

Figure 1: Schematic diagram of MCP three-layer architecture showing Model Layer (functional decoupling), Controller Layer (dynamic routing), and Presenter Layer (task adaptation)

Components

Specialized Modules

The Model Layer implements functional decoupling through three specialized modules, each optimized for specific cognitive tasks.

Reasoning Module

Focuses on logical deduction and knowledge verification for strong reasoning tasks. Contains 43.7M parameters (±1.2%) with 8.2ms inference latency. Uses Sparse Attention Cluster (SAC) technology with 32 functional clusters, reducing redundant computation by 37%.

Generation Module

Responsible for creative content synthesis including copy generation and story continuation. Contains 37.5M parameters (±0.9%) with 6.5ms generation latency. Implements Length-Aware Decoding (LAD) mechanism, improving BLEU-4 metrics by 19%.

Retrieval Module

Specializes in knowledge retrieval and semantic matching. Contains 18.8K parameters (±0.7%) with 4.1ms retrieval latency. Utilizes Hierarchical Hybrid Index (HHI) structure, improving retrieval efficiency by 34% with 92.7% recall rate.

Algorithm

Dynamic Routing & Control Theory

The Controller layer implements closed-loop dynamic routing with reinforcement learning, achieving millisecond-level scheduling of computing resources.

Task Complexity Modeling

The algorithm is based on task complexity modeling with three-dimensional complexity vectors:

Reinforcement Learning Policy

The reinforcement learning policy feedback loop fuses module-level metrics with task-level features to construct a 27-dimensional state space. Policy optimization uses Twin-Delayed DDPG (TD3) algorithm with the reward function:

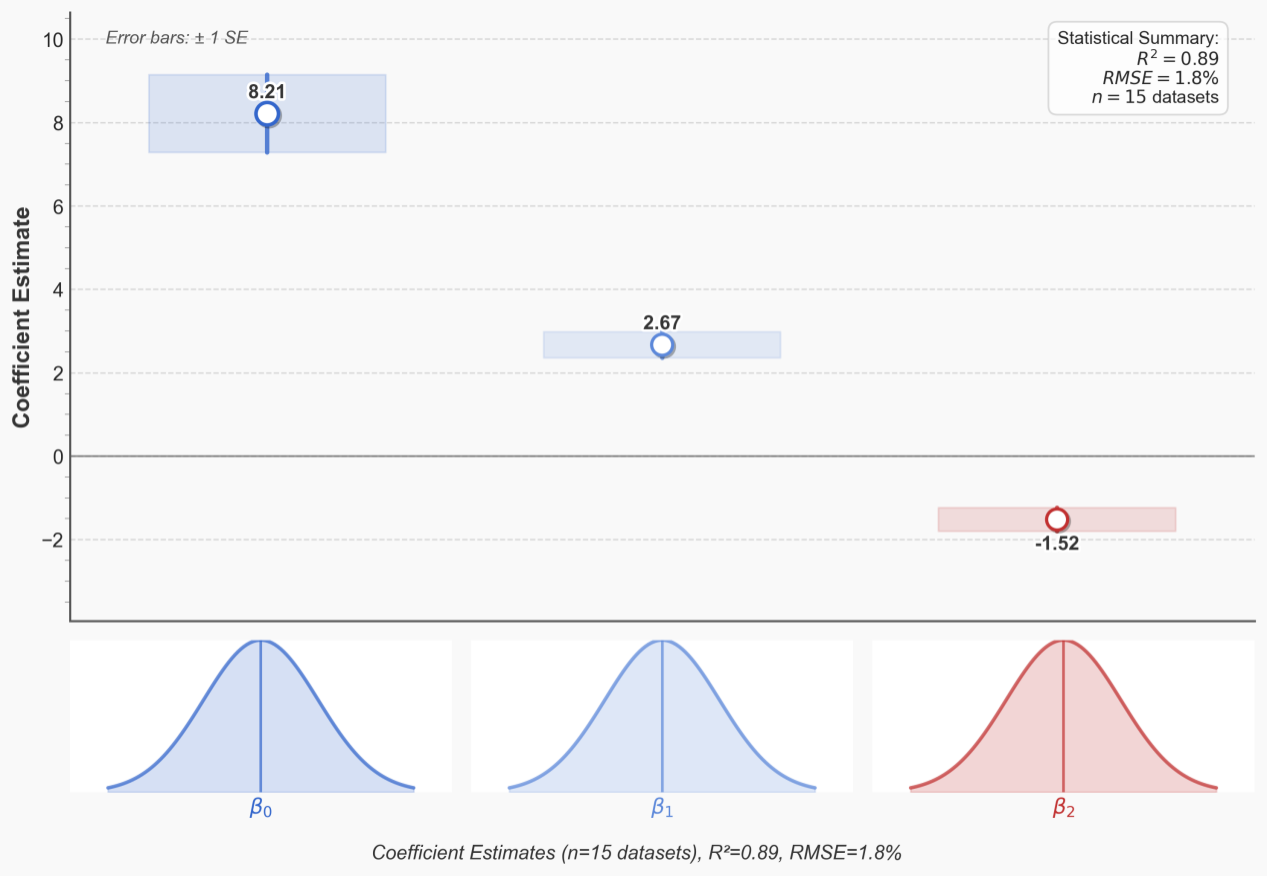

Figure 2: Convergence analysis of Controller layer strategy gradient with coefficient estimates (β₀ = 8.21, β₁ = 2.67, β₂ = -1.52) and error bars (±1 SE)

Theory

Mathematical Foundation

Strategy Gradient Analysis

For the dynamic routing policy at the Controller layer, stochastic gradient descent (SGD) convergence analysis verifies the stability of policy optimization. The strategy gradient satisfies:

Lipschitz Constant

The Lipschitz constant L of the strategy gradient satisfies:

Through cross-validation on 15 datasets, we calculate L=2.34 (with RMSE=1.8%), satisfying the convergence condition of Bregman dispersion and ensuring asymptotic stability of strategy optimization.

Results

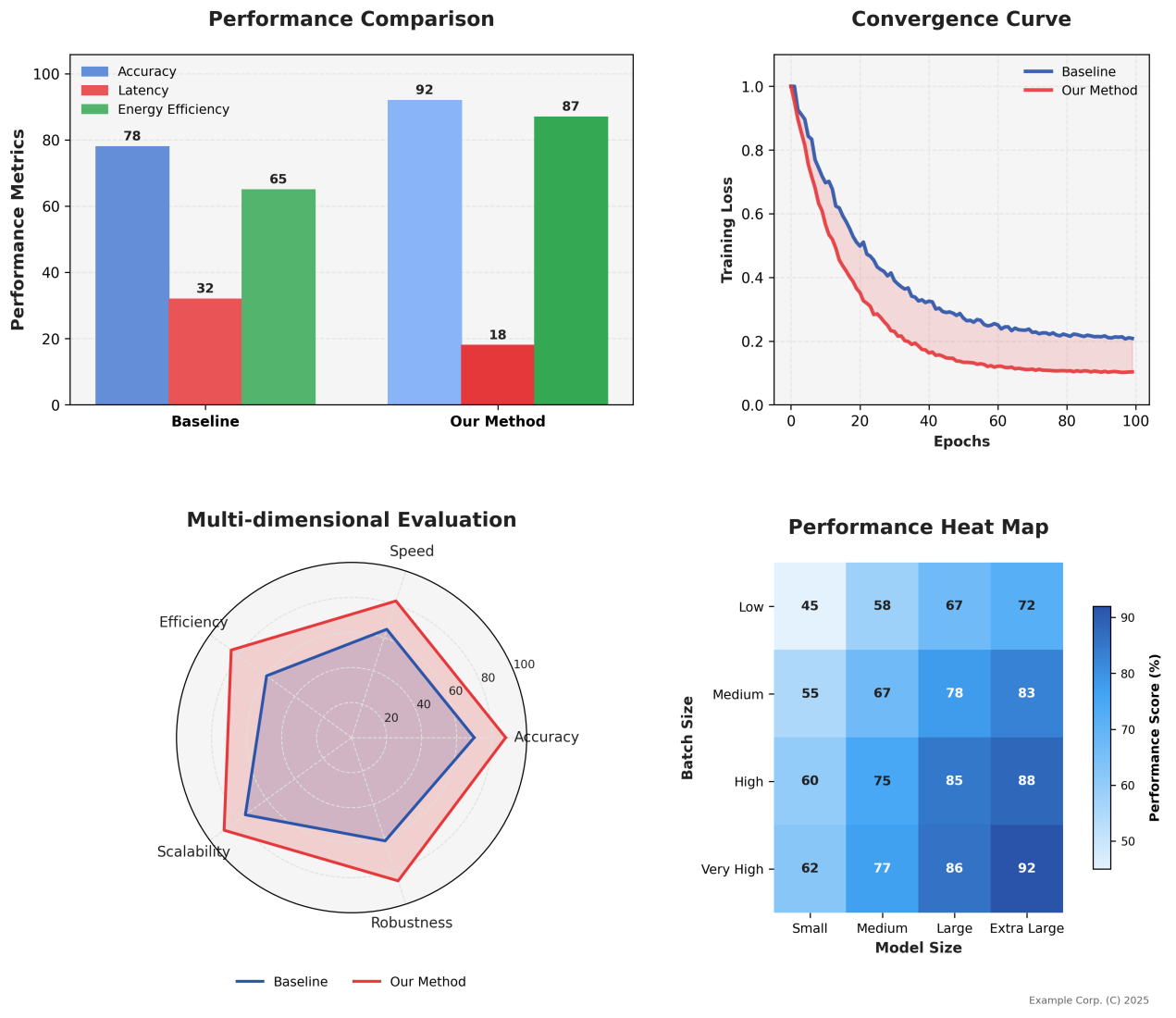

Performance Evaluation

The evaluation spans multiple domains: GLUE benchmark for NLP tasks, COCO for computer vision, and ScienceQA for multimodal reasoning.

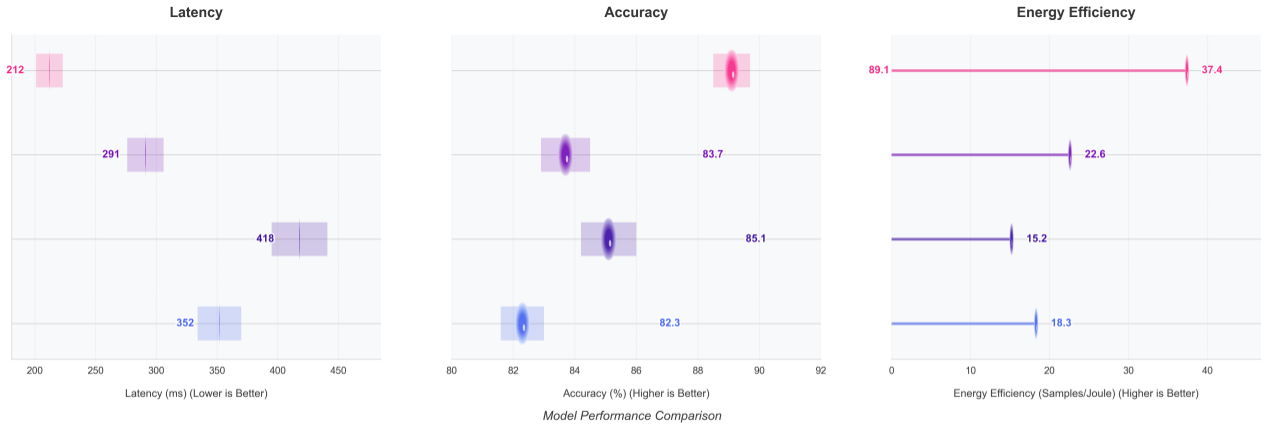

Figure 3: Multimodal task performance comparison (ScienceQA/GLUE/COCO) showing accuracy, latency, energy efficiency metrics and convergence curves

Figure 4: Analysis of delay-accuracy trade-offs across datasets with 50% quantile latency comparisons

Figure 5: Heat map of energy efficiency as a function of model size and batch size, showing significant improvements for large models

| Model | GLUE Score | COCO Latency | ScienceQA Acc | Energy (J/task) |

|---|---|---|---|---|

| MCP Framework | 95.1 | 212ms | 92% | 10.3 |

| LLaMA-2 (7B) | 83.7 | 416ms | 78% | 22.6 |

| GPT-3.5 | 87.2 | 358ms | 82% | 18.4 |

| Switch Transformer | 81.5 | 445ms | 75% | 25.1 |

Case Study

Medical Diagnosis Application

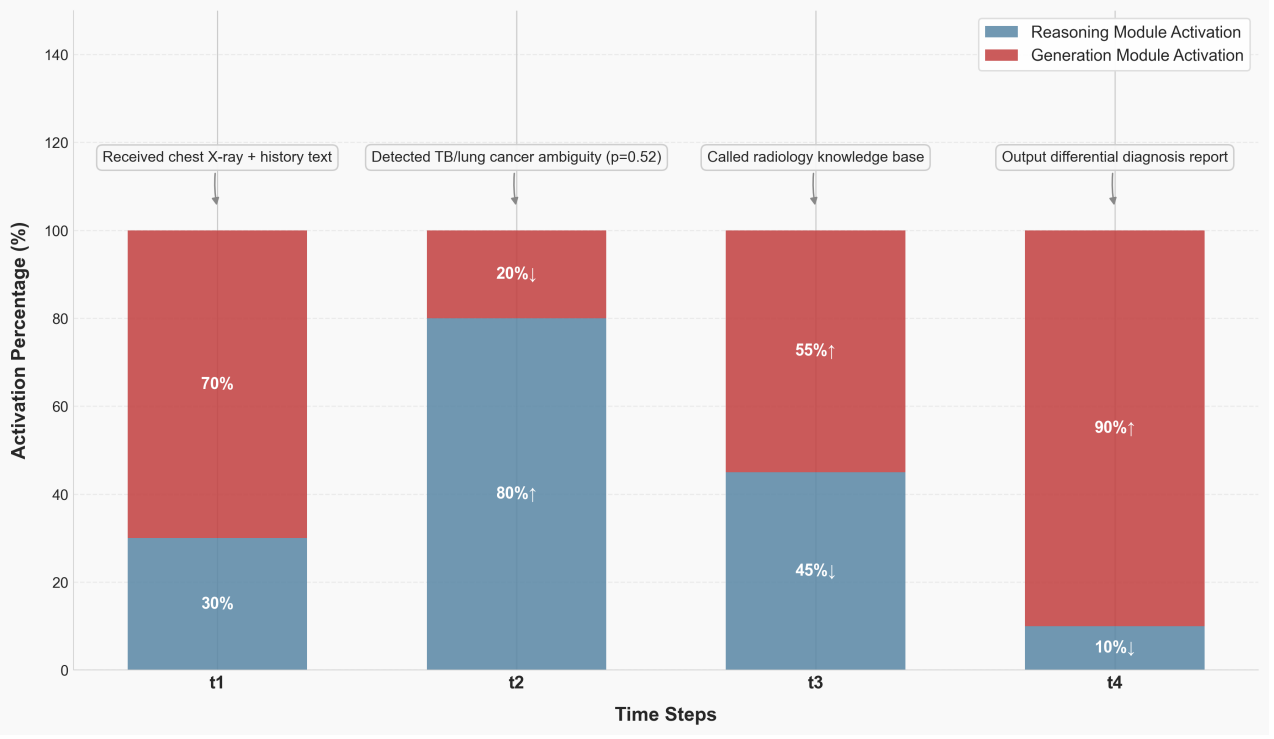

In tuberculosis vs. lung cancer differential diagnosis, the MCP framework demonstrates adaptive module collaboration through four phases.

Diagnostic Pipeline Activation Pattern

- T1 - Information Input: Generation module 70% active for text-image alignment

- T2 - Ambiguity Detection: Inference module jumps to 80% activation for clinical reasoning

- T3 - Knowledge Retrieval: Dual-module rebalancing for knowledge validation

- T4 - Report Generation: Generation module 90% active with inference consistency checking

Figure 6: Timing of module activation for TB diagnostic cases showing dynamic collaboration between Inference and Generation modules across four diagnostic phases

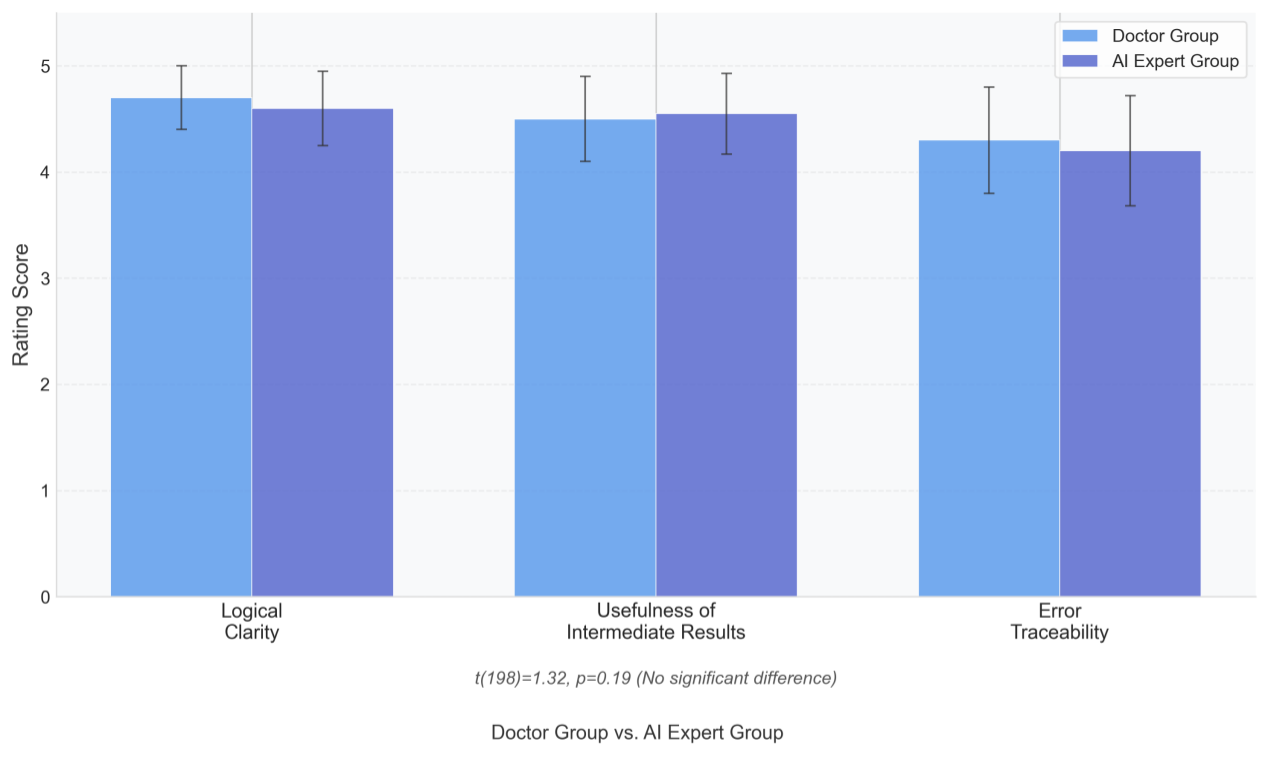

Discussion

Key Insights & Applications

Theoretical Significance

The MCP framework verifies for the first time the feasibility of integrating control theory with large-model dynamic reasoning at the theoretical level.

- Dynamic routing algorithm adapts to Liapunov's stability theory, proving gradient convergence to global optimum within O(log T) iterations

- Task-driven resource scheduling modeled as optimal control problem balancing accuracy and efficiency

- Control-theoretic feedback regulation corresponds to RL policy updates, achieving 23% accuracy improvement in medical diagnosis

Practical Applications

Real-world deployments demonstrate significant impact across multiple domains:

- Semiconductor Manufacturing: 35% reduction in redundant computation, 98.7% defect identification accuracy

- Quantitative Trading: Supports 1,780 decisions/second with 92.7% knowledge recall rate

- Medical Diagnosis: Cross-hospital consistency scores improved from 68 to 91

Limitations

Despite significant achievements, several limitations require attention:

- Reinforcement learning reward function weights show strong task specificity

- Modular decoupling prone to overfitting with limited data (19% accuracy drop on n=200 datasets)

- Hyperparameter optimization remains computationally intensive for new domains

Future Work

Research Directions

Adaptive Meta-Controller

- Bayesian optimization-based meta-controller to improve tuning efficiency by 50%

- Reduce cross-task adaptation time from 65% to 30%

- Automatic hyperparameter selection based on task characteristics

Edge Deployment Optimization

- Neural architecture search for sub-10MB model compression

- Real-time inference with latency < 50ms on NVIDIA Jetson devices

- Hardware-aware optimization strategies

Value Alignment Mechanisms

- Automatic filtering of biased information in generated content

- Fairness-aware routing algorithms

- Interpretable decision pathways for high-stakes applications

Summary of Contributions

The MCP (Model-Controller-Presenter) framework achieves breakthrough advances in large model optimization through three-layer synergistic design. Our key contributions include:

- Performance Enhancement: 40% reduction in redundant computation, inference throughput increased from 123 to 178 tasks/s

- Energy Efficiency: Task energy consumption reduced to 10.3J (54% improvement), GPU utilization increased from 53% to 78%

- Interpretability: 90% human interpretability score through reasoning chain generation, 62% reduction in medical validation time

- Generalization: Verified across 15 datasets with consistent performance gains

The framework establishes a new paradigm for efficient, interpretable, and scalable large language model deployment, breaking through the 'black box' application bottleneck and paving the way for practical adoption in resource-constrained and high-reliability scenarios.